Why Genealogists Should Be Careful With AI Photo “Restoration”

In recent months, more genealogists have begun warning about AI-edited historical photographs. They are right to do so.

Early image generators such as Midjourney and DALL·E made this issue visible. Today, newer tools used for photo enhancement and restoration, including Stable Diffusion–based apps, Adobe Firefly, and Google’s image models such as Nano Banana Pro, have made AI-generated imagery easier to produce and much harder to recognize.

The problem is not that these tools exist. The problem is how they work.

When you upload an old photograph and ask an AI system to “enhance,” “repair,” or “restore” it, the software does not recover lost or damaged pixels from the original image. It analyzes what remains, then generates new visual information based on patterns learned from millions of other photographs. The output may look clean, realistic, and emotionally compelling, but many of its details are guesses.

Features such as eye color, skin tone, facial structure, clothing, background, expression, and even age lines may be altered or entirely invented. What you are seeing is not a recovered historical photograph. It is an illustration of what the AI believes the subject might have looked like.

That distinction matters in genealogy.

Unlabeled AI-generated “restorations” are already appearing in online trees, hint systems, and social media posts. Once these images circulate without explanation, future researchers may assume they are original records. Over time, synthetic images can quietly replace authentic evidence and distort the historical record.

This makes it increasingly important to pause when you encounter a flawless, glowing “restored” historical photo. Ask whether the image represents documented history or a modern re-imagining. As AI imagery becomes more realistic, the line between evidence and invention becomes easier to cross without noticing.

In response to these risks, groups such as the Coalition for Responsible AI in Genealogy have offered clear, practical guidance:

- Always label AI-modified images.
- Always cite the original source.
- Use AI images as illustration, not evidence.

These are not limitations. They allow genealogists to enjoy modern tools while preserving the integrity of historical research.
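For readers who manage their photo collections with scripts or a database, the three guidelines above can be sketched in a few lines of Python. This is only an illustration under my own assumptions: `PhotoRecord`, its fields, and `caption` are hypothetical names, not part of any genealogy software or of the Coalition's guidance.

```python
from dataclasses import dataclass


@dataclass
class PhotoRecord:
    # Hypothetical record layout, for illustration only.
    title: str
    original_source: str  # citation for the unaltered original
    ai_modified: bool     # True if any AI tool touched the image
    tool: str = ""        # name of the AI tool, if known


def caption(record: PhotoRecord) -> str:
    """Build a caption that always cites the source and labels AI edits."""
    parts = [record.title, f"Original source: {record.original_source}"]
    if record.ai_modified:
        tool = f" with {record.tool}" if record.tool else ""
        parts.append(f"AI-modified{tool}. Illustration only, not evidence.")
    return " | ".join(parts)


# Example with made-up values.
record = PhotoRecord("Tintype portrait", "Family collection", True, "ExampleTool")
print(caption(record))
```

The point of the design is that the label and the citation travel with the image wherever the caption goes, so a later researcher does not have to guess.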

I have seen firsthand why this matters.

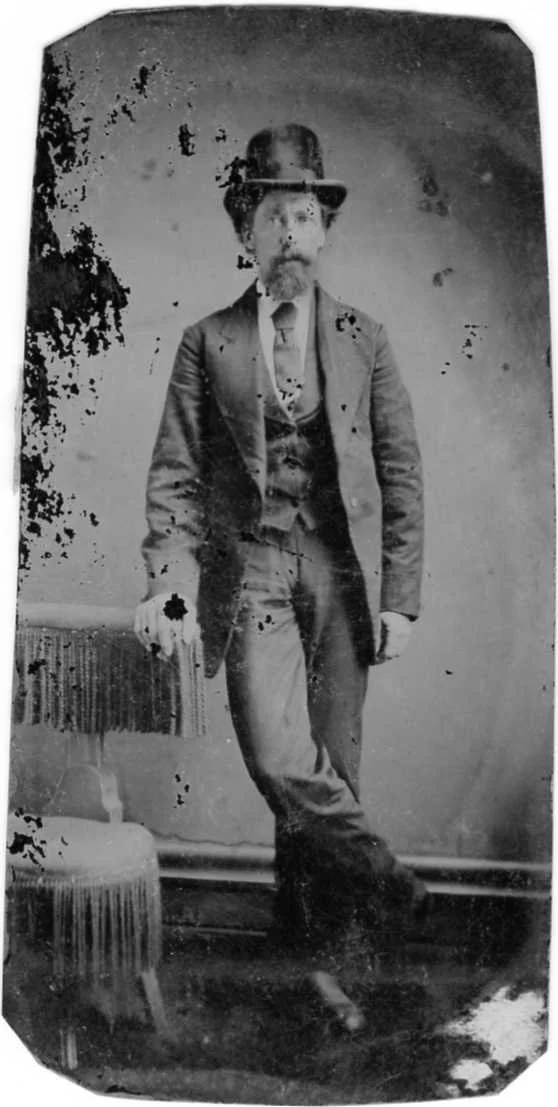

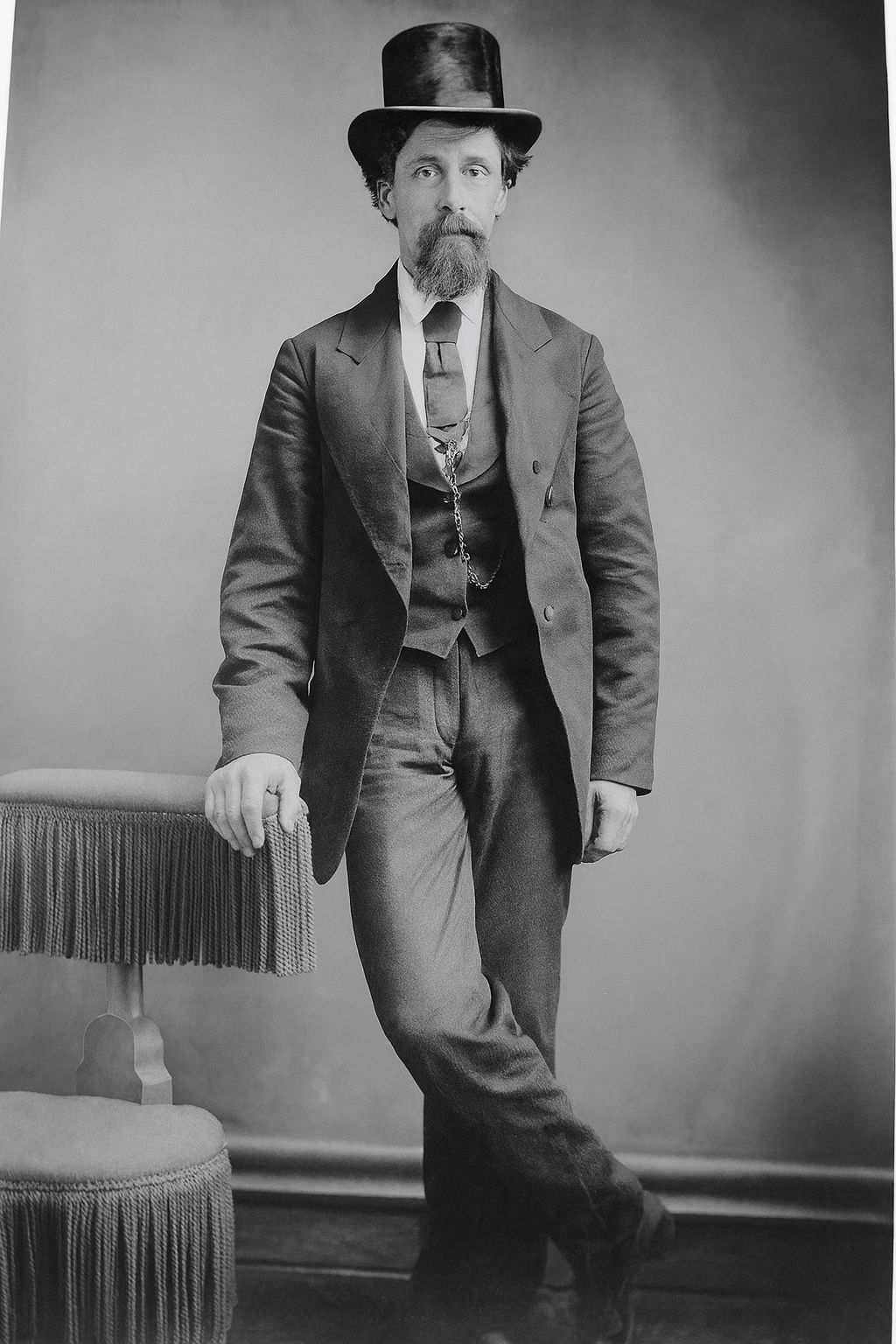

A cousin has this scratched tintype of a relative. I asked an AI tool to fill in only the damaged areas. Instead of repairing the scratches, the system generated a completely new, pristine portrait. It looked impressive. It was also no longer my ancestor’s face.

The result was an AI interpretation: convincing, polished, and entirely invented.

This is the key point genealogists need to understand: generative AI always generates. Even small, well-intentioned requests trigger the creation of new pixels, new features, and new assumptions. If those changes are not disclosed, they can quietly overwrite authentic history.
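One rough way to see this for yourself is to measure how much of the image changed outside the areas you asked the tool to repair. The sketch below is a simplified illustration, not a forensic method: it assumes both images are already the same size and aligned, represented as grids of grayscale values, and the function name `changed_outside_mask` and its parameters are my own. In practice, AI output often differs in size or framing, which would need to be handled first.

```python
def changed_outside_mask(original, edited, damage_mask, tolerance=0):
    """Return the fraction of undamaged pixels that the edit altered.

    original, edited: equal-sized 2D lists of grayscale values (0-255).
    damage_mask: 2D list of booleans, True where the original was damaged.
    tolerance: largest difference still treated as "unchanged".
    """
    undamaged = 0
    altered = 0
    for orig_row, edit_row, mask_row in zip(original, edited, damage_mask):
        for o, e, damaged in zip(orig_row, edit_row, mask_row):
            if damaged:
                continue  # changes here were requested, so skip them
            undamaged += 1
            if abs(o - e) > tolerance:
                altered += 1
    return altered / undamaged if undamaged else 0.0


# Tiny made-up example: one damaged pixel, three undamaged ones.
original = [[10, 20], [30, 40]]
edited = [[10, 25], [99, 41]]
mask = [[False, False], [True, False]]
print(changed_outside_mask(original, edited, mask))
```

A true repair would leave this fraction near zero. A value close to one means that nearly every pixel you did not ask to change was regenerated anyway.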

AI can be a powerful tool for genealogy when used responsibly. It can help illustrate stories, improve legibility, and support education. But photographs are evidence. Once altered without clear labeling, they stop being records and become artwork.

If you would like help researching, documenting, and preserving your family history with care and proper standards, I offer personalized genealogy research services.